BLIP is a new vision-language model using pre-trained vision and language models. It achieves state-of-the-art results in a range of vision-language tasks. For technical details, please visit its blog and paper. This post is focused on the understanding of its capability and applications in image annotation using spot checks.

Image annotation can be viewed as the process of adding textual description or metadata to an image. The metadata can include information about objects, topics, or features within the image. The annotations can then be used later for various tasks, such as image analysis, search, retrieval.

BLIP has an inference demo page. Using the demo, we evalauted its outputs on the below two tasks: 1) extract iamge description and metadata from a photo of cannon beach; 2) extract information from a utility bill.

A photo of Cannon Beach

Below is a photo of Cannon Beach in Oregon.

We first tried the image captioning from BLIP, and it looks quite good:

We then asked BLIP a list of questions about the photo.

- What are in the photo?

- Where is this place?

- How is the weather in the photo?

So far BLIP did an awesome job, and we were impressed that BLIP can understand the objects and weather in the photo well. We can definitely see BLIP will help with some of the image understanding projects. We decided to give BLIP some hint to see if it can guess where the photo was taken (Cannon beach in Oregon).

- Where in Oregon is this place?

The result is quite surprising and amusing. Our guess is that the model might be "hallucinating", which is a typical issue of large language model (LLM).

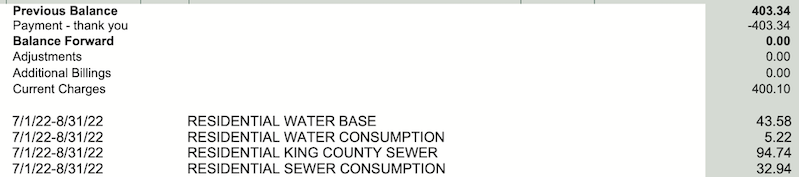

The screenshot of a utility bill

Optical Character Recognition (OCR)

We then tested if BLIP can understand the textual data in a utility bill.

The image caption generated by BLIP:

"Table" is accurate, but "food items" and "receipt" are not. This may be because BLIP noticed the visual similarity between the utility bill and receipt. It probably lacks the understanding of the textual data in the image.

Again, we asked several questions:

- What is this picture?

The screenshot does include date, so not very off.

- What is the billing cycle (since it reads "date and time")?

It's a bi-monthly cycle from 7/1 to 8/31. Quarterly is incorrect.

- What is the current charge?

The answer is hilarious and irrelavant to the image. The model is probably confused by the word "charge" having different meanings, and relies on the frequent co-occurence between "charge" and "110v".

- What is the charge for water consumption?

Again the answer is incorrect.

From the generated image caption and the answers to the above question, BLIP looks to have limited understanding of textual data in an image, which is probably because the image with heavy textual data is out of its training distribution, and meanwhile it may not directly use OCR to extract textual data from an image. One option for better annotation of images with majorly textual data is to extract the textual content using OCR, and then use LLM to get the annotations.

Summary

In this post, we evaluated the capability and perfomrance of using BLIP for image annotation with spot checks. BLIP has impressively good performance when answering questions related with an image of Cannon Beach, though with minor errors. For images with heavy textual data, BLIP shows a lack of understanding of the textual content, and provides incorrect or irrelevant answers. OCR and LLM can be a better option to handle images of text, which we will cover in a future post.